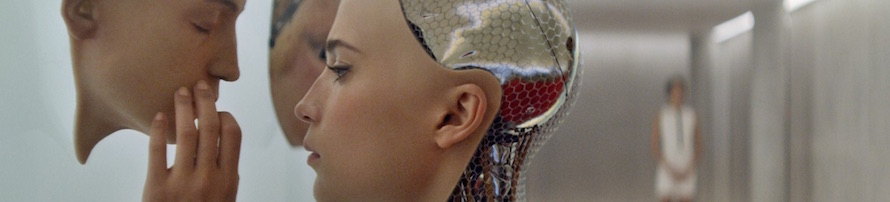

Minds, Robots, and the End of Humanity (FYS-IFS-G)

Course Objectives

We will read brief essays, news items, and a few journal articles related to the development of artificial intelligence (A.I.), and robotic A.I. in particular. While this topic might appear overly narrow or technical, or to belong to the land of fantasy or science fiction, we will be focusing primarily on things that exist right now or will in the very near future, and which will affect all of us in potentially drastic ways. These issues are not irrelevant, nor can they be safely ignored.

Apart from that, we consider these materials primarily in pursuit of the main goals of the course, namely, to develop your writing skills and your analytical and critical thinking skills.

My own background is in philosophy (I am a Kant scholar), so I will be reading much of this literature alongside you as an educated and curious layperson. Advanced math or programming skills are most welcome, of course, but they are not required in this class.

Nearly all the texts that we will read are aimed at a general, educated audience. If you find them difficult to read, now is the time to ratchet up your effort and focus your attention. This is the class where such readjustment is supposed to happen, and much of my job is ensuring that everyone gets up to speed for college-level reading, writing, critical thinking, and research. You will develop these skills in the context of investigating these issues of A.I. and the human mind.

Main themes for the course

All Watched Over by Machines of Loving Grace

I like to think (and

the sooner the better!)

of a cybernetic meadow

where mammals and computers

live together in mutually

programming harmony

like pure water

touching clear sky.

I like to think

(right now please!)

of a cybernetic forest

filled with pines and electronics

where deer stroll peacefully

past computers

as if they were flowers

with spinning blossoms.

I like to think

(it has to be!)

of a cybernetic ecology

where we are free of our labors

and joined back to nature,

returned to our mammal

brothers and sisters,

and all watched over

by machines of loving grace.

The nature and meaning of artificial intelligence (A.I.). Being able to discuss A.I. requires that we first gain some clarity about what we mean by ‘intelligence’. Are thinking and consciousness such that a conscious machine or a thinking machine is even possible?

Economic Worries. Whether or not computers and robots ever gain consciousness, they have taken over, and will continue to take over, a great many jobs previously performed by human beings. Technological advances have been displacing workers since the beginning of technology, but the effects have been especially severe since the early industrial period in eighteenth-century Europe, beginning in England where the Luddite movement first emerged. Our new displacement from the robotization of the workplace has already been felt, especially in the automotive industry, and the rise of A.I. may soon leave very little for human beings to do, as argued, for instance, by Martin Ford in his recent book, Rise of the Robots (2015).

Creators and Creations. The idea of mechanical intelligence goes all the way back to the ancient Greeks. At first it was just the gods who could construct such a thing (examples of which we find in Homer’s Iliad, Hesiod’s Works and Days, and Ovid’s Metamorphoses), but Mary Shelley’s Frankenstein (1818), in the wake of recent discoveries in the natural sciences, brought a new twist to our idea of creating artificial life.

Philosophical questions about consciousness and personhood. What is consciousness, and what is its relationship to the brain? How are the mind and the brain related? Is a person just a kind of physical organism?

Moral issues. What moral obligations, if any, do creators have to their creations? If we manage to create beings that (who?) are self-aware, are we still allowed to own them and to force them to do our bidding? Are we morally obliged to future human generations to continue this research? Or should we stop this research, given the possible dangers of it growing beyond our control? Assuming that we will eventually succeed in creating so-called super-intelligent computers, is it possible also to program a kind of moral code into them? Or will such computers spontaneously develop a morality comparable to human morality?

A.I. as dangerous. Hollywood movies warning us of the dangers of artificial intelligence have been a fixture of cinema ever since the silent film era, and before that in literature, but just in the last year we have heard warnings from responsible experts in the field who, presumably, are in the best position to know about the possible dangers: Elon Musk, Bill Gates, Stuart Russell, and Stephen Hawking (not a computer scientist, but a bright physicist all the same), to name a few. A book that Gates urges everyone to read is by the philosopher Nick Bostrom: Superintelligence: Paths, Dangers, Strategies (2014). See his recent TED talk: “What happens when our computers get smarter than we are?” (March 2015).

By the end of the course you will have:

(1) become a stronger and more self-confident writer, no matter how good you already were;

(2) developed your skills for critically reading a text and evaluating arguments and beliefs; and

(3) become acquainted with some of the central concepts and current issues surrounding the social implications of A.I., robotics, and the philosophy of mind.

Some virtues to bring with you into the philosophy classroom:

humility when comparing your beliefs with those of others;

patience for listening closely to views that seem foolish or misguided to you;

courage to advance in the face of adversity what seems to be the correct view;

endurance for following arguments to their conclusion;

humor for those moments when you feel the utter futility of your efforts.

Manchester University // Registrar // Department of Philosophy and Religious Studies // Last updated: 15 Jul 2015